Exploring bias, explainability and accountability of AI and deep learning challenges in the railway sector

Posted: 14 May 2019 | Alejandro Saucedo

The railway sector is facing new risks. The recent increase in adoption of artificial intelligence (AI) and deep learning systems has introduced new challenges. Intelligent systems that learn from data have been proven to bring huge value through insights and automation at scale, and hence adoption will only continue to increase. The railway sector needs to adapt. Fortunately, it is in a good position to do so. But before proceeding, Alejandro Saucedo, Chief Scientist at the Institute for Ethical AI & Machine Learning, explains that we should first gain a high-level understanding of what this technology brings, and then of the new challenges it introduces.

Deep learning

AI and deep learning bring a significantly higher level of automation at scale, whether it is the automation of insight analysis or of manual and repetitive human tasks. Thanks to their ability to learn from significantly larger datasets, it is possible to build systems that can outperform humans in isolated vertical tasks. We have seen AI outperform humans in tasks such as predictive maintenance, document classification, passenger counting and beyond.

Challenges

Recently, however, several major challenges with deep learning have been brought to light. Three of the main challenges I want to address here are:

- Bias

- Explainability

- Accountability.

These three areas are critical to ensuring the safe and efficient development and deployment of deep learning systems in the sector. For simplicity, we will explain these challenges with an example: we will assume we are automating the customer support process using a deep learning chatbot system.

Bias

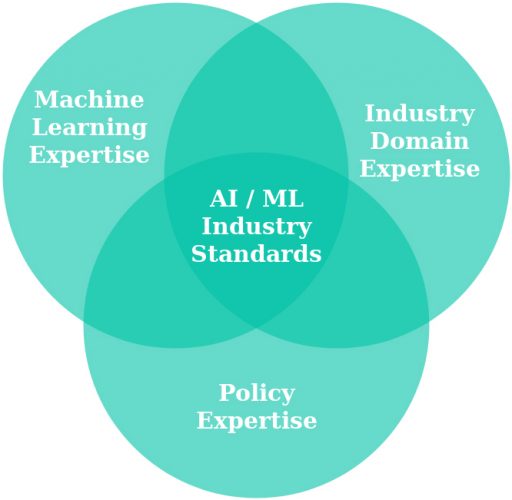

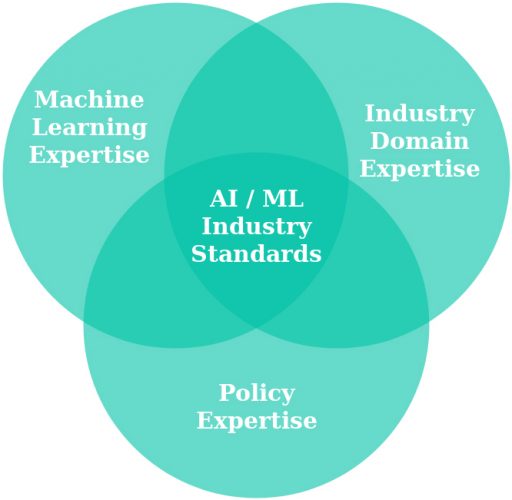

Collaboration between machine learning experts, policy experts and industry domain experts will enable large-scale deployments of deep learning systems to flourish

For starters, let’s assume we have been able to train our deep learning chatbot system by giving it a large number of data examples that have been gathered. Once it learns from the data, it can perform the desired task of answering our customers’ questions. The challenge is that, throughout the development of this deep learning model, multiple biases can be introduced without being noticed. Whether it is due to incorrect examples or inherently biased datasets, the system will always carry some inherent bias. Because of this, our objective is to mitigate the large negative effects of undesired bias. If we don’t do this, we will end up with undesired behaviours that may have large negative impacts on the business. There have been high-profile cases where undesired bias has gone unnoticed, such as Microsoft’s ‘racist’ chatbot [1] and Amazon’s ‘sexist’ recruitment platform [2], both of which accidentally showed negative behaviour due to unnoticed bias.

Fortunately, it is possible to identify undesired bias and mitigate it to a reasonable level by ensuring that the right metrics are used to evaluate the system, and that the right monitoring is put in place to ensure the expected performance is maintained after deployment. The Institute of Electrical and Electronics Engineers (IEEE) is currently leading the development of a standard on algorithmic bias considerations [3], which will allow for formal standard definitions of bias in deep learning.
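To make the idea of "the right metrics" more concrete, the sketch below shows one possible check for the chatbot example: measuring accuracy separately for each customer group and flagging a large gap between groups. This is only an illustration under stated assumptions. The group names, the evaluation records and the alert threshold are all hypothetical, and per-group accuracy is just one of many metrics a team might choose.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, expected, predicted) records."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, expected, predicted in records:
        totals[group] += 1
        if expected == predicted:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical evaluation records:
# (customer group, expected intent, intent predicted by the chatbot)
records = [
    ("native_speaker", "refund", "refund"),
    ("native_speaker", "delay", "delay"),
    ("native_speaker", "ticket", "ticket"),
    ("native_speaker", "refund", "delay"),
    ("non_native_speaker", "refund", "delay"),
    ("non_native_speaker", "delay", "delay"),
    ("non_native_speaker", "ticket", "refund"),
    ("non_native_speaker", "refund", "refund"),
]

ALERT_THRESHOLD = 0.2  # illustrative value, not a recommended standard

per_group = accuracy_by_group(records)
gap = max_accuracy_gap(per_group)
needs_review = gap > ALERT_THRESHOLD

print(per_group)
print(f"accuracy gap: {gap:.2f}, needs review: {needs_review}")
```

Running the same check on live traffic at regular intervals is one simple way to turn an evaluation metric into ongoing monitoring.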

Explainability

Once our deep learning chatbot system is deployed, our journey is far from finished; it has only just begun. In situations where the system makes an important or unexpected decision, there is often a requirement to explain why it made that decision. With deep learning systems this becomes a challenge, as they often behave as highly complex black boxes. This has become an industry-wide problem, and the use of such systems has been challenged several times. A high-profile example was a deep learning system deployed to automate court sentencing whose predictions could not be interpreted [4]. In critical environments, such as some use cases in the railway sector, this is not acceptable.

It is possible to introduce a justifiable level of explainability in deep learning by combining subject domain expertise with machine learning analysis. This often results in a trade-off between accuracy and explainability, but it is a trade-off that may be necessary. The Institute for Ethical AI & Machine Learning [5] recently released a toolbox to help machine learning teams analyse black box deep learning systems [6].
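As a rough illustration of what analysing a black box can look like, the sketch below uses permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. This is a generic, model-agnostic technique and not the API of the toolbox mentioned above. The `black_box_predict` function, the feature names and the data are hypothetical stand-ins for a real model and dataset.

```python
import random


def black_box_predict(row):
    # Hypothetical stand-in for an opaque model: escalate to a human agent
    # when the reported delay is long. It ignores the ticket price entirely.
    delay_minutes, ticket_price = row
    return "escalate" if delay_minutes > 30 else "auto_reply"


def accuracy(predict, rows, labels):
    hits = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return hits / len(rows)


def permutation_importance(predict, rows, labels, feature_names,
                           repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importance = {}
    for col, name in enumerate(feature_names):
        drops = []
        for _ in range(repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only this one feature replaced.
            perturbed = [
                row[:col] + (value,) + row[col + 1:]
                for row, value in zip(rows, shuffled)
            ]
            drops.append(baseline - accuracy(predict, perturbed, labels))
        importance[name] = sum(drops) / repeats
    return importance


# Hypothetical inputs: (delay in minutes, ticket price)
rows = [(5, 20.0), (45, 80.0), (10, 35.0), (60, 15.0),
        (0, 120.0), (90, 50.0), (25, 60.0), (40, 10.0)]
# Labels are the model's own outputs, so the scores show which features
# the model relies on, not how well it matches ground truth.
labels = [black_box_predict(row) for row in rows]

importance = permutation_importance(
    black_box_predict, rows, labels, ["delay_minutes", "ticket_price"]
)
print(importance)
```

A feature whose shuffling never changes the predictions scores zero, which is the kind of evidence a domain expert can then check against what the system is supposed to be doing.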

Accountability

Throughout the design, development and operation of our deep learning chatbot system, there will be a significant number of stakeholders involved. If something goes wrong, it is critical for accountability to be in place. Deep learning systems, however, introduce a new paradigm when it comes to accountability, because there are numerous layers of decision making involved in the creation of the system. If something goes wrong, is the data labeller accountable? Or is it the engineer who deployed it? Or the delivery manager who operates it? These questions are often made harder to answer by the highly complex nature of the deep learning systems themselves.

However, it is possible to adapt traditional governance frameworks to fit the purpose of deep learning systems and ensure the right level of accountability is in place during the design, development and operation of these systems. The AI Procurement framework [7] is an initial attempt to provide an accountability and governance framework through a set of machine learning maturity model criteria [8].

The solution: Collaboration

The right standards for AI and deep learning need to be set in place in order to raise the bar for safety, quality and efficiency in the railway sector. Fortunately, the sector has everything available in order to build the right standards. However, this will require key collaboration between machine learning experts, policy experts and industry domain experts. Only through the collaboration of all these key stakeholders will it be possible to develop the framework for large-scale deployments of deep learning systems to flourish in highly critical environments.

The Institute for Ethical AI & Machine Learning

The Institute for Ethical AI & Machine Learning [5] is a UK-based research centre that carries out world-class, highly technical research into responsible machine learning systems. The Institute was formed by cross-functional teams of machine learning engineers, data scientists, industry experts, policymakers and professors in STEM, Humanities and Social Sciences. The Institute’s vision is to minimise risks of AI and unlock its full power through frameworks that ensure the ethical and conscious development of AI projects across all industries.

References:

1. https://www.theguardian.com/technology/2016/mar/30/microsoft-racist-sexist-chatbot-twitter-drugs

2. https://www.independent.co.uk/life-style/gadgets-and-tech/amazon-ai-sexist-recruitment-tool-algorithm-a8579161.html

3. http://sites.ieee.org/sagroups-7003

4. https://www.wired.com/2017/04/courts-using-ai-sentence-criminals-must-stop-now

5. https://ethical.institute

6. https://github.com/ethicalml/xai

7. https://ethical.institute/rfx.html

8. https://ethical.institute/mlmm.html

Biography

Alejandro Saucedo is the Chief Scientist at the Institute for Ethical AI & Machine Learning, where he leads highly technical research on machine learning explainability, bias evaluation, reproducibility and responsible design. With over 10 years of software development experience, Alejandro has held technical leadership positions across hyper-growth scale-ups and tech giants including Eigen Technologies, Bloomberg LP and Hack Partners. He has a strong track record building departments of machine learning engineers from scratch and leading the delivery of large-scale machine learning systems across the financial, insurance, legal, transport, manufacturing and construction sectors (in Europe, U.S. and Latin America).
